“A few little things at a time.”

When it comes to tomorrow’s mobility, autonomous driving is a game-changer. State-of-the-art technology will soon turn active drivers into passive travelers, a relaxed coexistence that calls for a certain degree of trust and, in turn, provides a sense of emotional security. So, looking ahead, a key question is: how does the connection between man and machine actually work? MIT Media Lab researcher Kate Darling on the strange emotional bonds humans develop with robots and how we can be tricked by them.

Steffan Heuer (interview) & Katharina Poblotzki (photo)

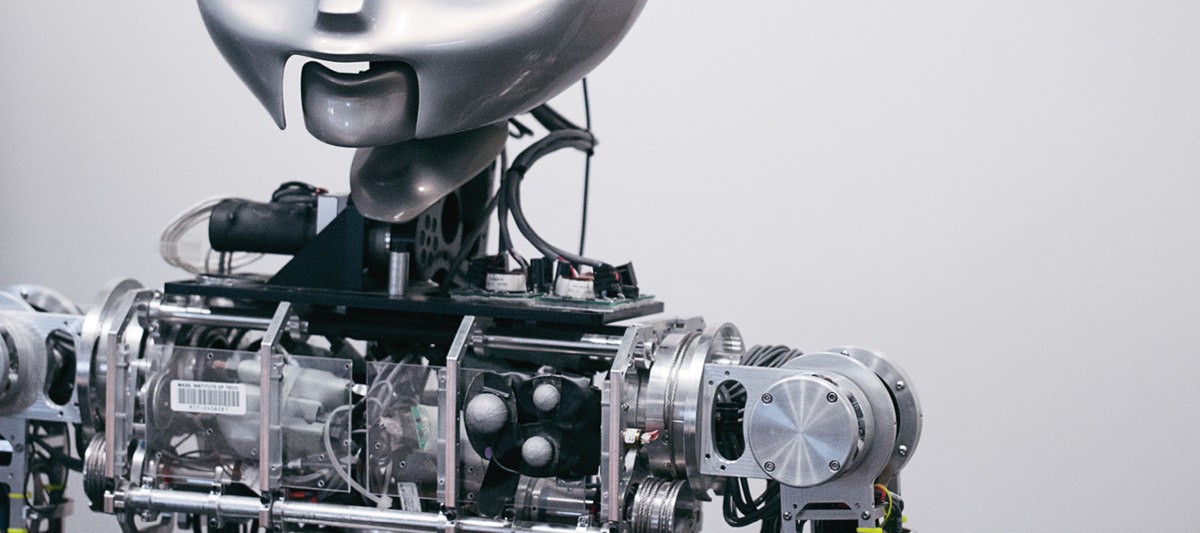

Born in America and raised in Switzerland, Kate Darling is one of the leading experts in the field of robot ethics. She has been teaching and researching at the Massachusetts Institute of Technology Media Laboratory since 2011.

Thinking ahead: Artificial intelligence (AI) will fundamentally change our mobility, working environments and lives. That is why the beyond initiative focuses on analyzing and shaping the ethical, legal and social aspects of autonomous driving and the future of work in the age of artificial intelligence. The people at beyond feel strongly that shaping the AI transformation requires business, the scientific community, politicians and society to work together. As a first step, the beyond initiative has established an interdisciplinary network of international AI experts, in which philosophers and psychologists collaborate with software engineers, start-up entrepreneurs and legal experts. Kate Darling is part of the team.

In other words, our behavior toward humans predicts how we will treat robots. Isn’t that a great guide to designing robots, to give them personalities so people treat them well?

That depends on what the robot is being used for. There are certainly use cases where you want people to feel empathy for a robot, to like it and treat it as a social being, for example in health care or therapy settings, or as a replacement for animal therapy. Then there are cases where you don’t want people to treat the robot like a living thing, such as in the military, where soldiers work with robots as tools. It can be inefficient or dangerous to bond emotionally with a tool.

Our daily lives are increasingly filled with systems and assistants that have names and even pretend to be a person when they talk. Is that part of the attempt to trick our brains?

Yes, it is, although we’re still trying to figure out the right design. If you try to do too much, it will disappoint expectations and frustrate people. Particularly in the call center setting, people are already frustrated. You don’t want to annoy them even more. So getting that balance right is really difficult. On a rational level, we know we’re talking to a machine, and that can be a reason to get aggressive. That can have surprising consequences. I was talking to a large company that uses a chatbot to onboard new employees. New hires can ask it questions such as, “What are my benefits? How do I find this and that?” They noticed that some people were verbally really abusive toward the chatbot and asked me if they should be worried or report them to Human Resources. I think that’s a little bit extreme, but the truth is we don’t know what it means when people are particularly aggressive toward software. It might actually be a good thing to use chatbots to vent and let out your aggression. Or it may encourage that behavior generally.

What advice did you give them?

I told them we don’t know. Increasingly, we will interact with these systems through natural language, which makes them appear like social actors. My guess is that as the systems are better able to mimic a real conversation, we will naturally fall into a pattern of being nice to them. Ironically, some parents are already worried about the opposite—that Amazon’s Alexa teaches their children bad behavior because it doesn’t require “please” or “thank you” when you want something.

Doesn’t your research imply that we will become more polite over time, as those systems improve?

What little research we have right now indicates that polite people will be polite to their robot, just as they’re polite to humans, while people who are impolite will be impolite to their robot. What we don’t know is whether we can change people’s behaviors by teaching them habits through robots, either intentionally or unintentionally. So if you have a robot that doesn’t encourage you to be polite, and you get into the habit of being rude toward it, could that make you rude toward other people? Or will you be able to distinguish mentally between robot and human? We’ve had similar questions about whether playing violent video games makes you violent. That has never been conclusively answered because it’s very difficult to research.

Does the form make a difference—whether, for instance, we’re talking to an adaptive voice-controlled loudspeaker in the kitchen or an assistant on a smartphone or in the car?

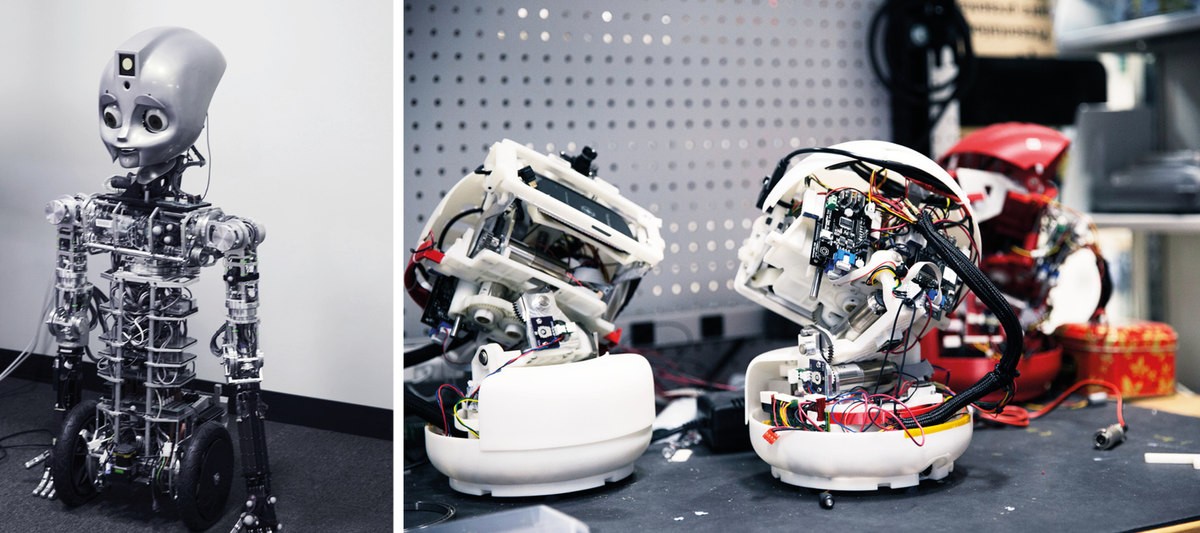

There’s certainly a spectrum. Research shows we treat something more like a social actor if it’s a physical object, as opposed to something that’s on a screen or disembodied. The biggest impact comes from putting such a system into a robot with some lifelike shape. If you do it right, you could get people to engage with such a system in a slightly different way. Take a navigation system. It might be a good idea to make it a little bit more human. But it’s important to get the balance right. If you try to do too much and fail to meet people’s expectations, they won’t like it. Sometimes little things make a difference: even just giving the car or the system a name helps people relate to it.

We are entering a world where everything is connected. Can you outline how you see the world in a few years’ time? Will we be able to differentiate, that is, bark orders at the assistant in the car and then turn to our passenger and be nice because that’s what humans do?

We’re able to switch back and forth like that because we are mostly dealing with humans and the technology is still really bad. Voice recognition doesn’t work all the time and doesn’t fool us. You can have a conversation with Alexa for maybe 20 seconds before you realize she has limitations. But that will get better. The interesting thing to me is what happens as the technology starts improving and we start treating it more and more like a social actor.

Do you see any downsides to treating machines like living beings?

Robots are made by people who can intentionally design them to appeal to us. These robots aren’t just things that exist in the world; we create them, and sometimes the incentives of companies who build them don’t align with the public interest. There are a lot of privacy implications. Companies might want to use social interactions with robots to gather more data than people would otherwise be willing to reveal, and there may be other ways to manipulate people using this technology, say by getting them to buy products and services. Especially because it’s all coming into our intimate spaces—our lives and our homes. And these systems may also shape people’s behavior.

Can you give us an example?

The design of these devices affects whether people perceive their privacy as being violated. For example, people don’t like the idea of drones flying over their backyard, but they don’t seem to mind the high-resolution satellite imaging systems that can already see every detail of their backyards. The physicality of a robot, such as whether it has eyes, can make people feel watched and can change their behavior in their homes. It depends on whether the camera is visible to people or not. If it’s an invisible camera, people will probably forget about it, but if it’s there and you can see it blinking, watching you, that’s a very different experience.

Are you worried that we will have sensors and cameras everywhere we turn?

It is creepy, but one thing is important to remember: We don’t have a good way to connect all of these systems, which is a barrier to their usefulness. In theory, I think, smart cities can exist, but I see a lot of hurdles to that technology becoming practical very soon. Even here at the MIT Media Lab, our robots are always broken. And when you’re dealing with cities, it’s important to get it right. You can’t have technology that malfunctions half the time. It’s not that we won’t get there; it’s just that we’re not on the cusp of achieving it. It will be gradual—a few little things at a time.

What’s your biggest worry about your son growing up in a world of robots, AI and sensors?

My biggest concern is the same one parents have nowadays with tablets and apps. Developers want to give you flashy things to keep you interested, and we’re not sure what that does to children’s attention spans. I think robots, smart systems and AI can also be used for that type of functionality. If all your toys are trying to get your attention and want you to interact with them, we don’t really know what the effects are.